Talks and Seminars

Since 2006 I’ve been recording my significant talks here (see also the summary list).

- The ExcaliData Implementation of Active Storage. An opportunity for Lustre?

Meeting: UK Lustre Users Group

Manchester, 06 December, 2023

Includes the following talk:

In this keynote talk I introduced the work we have been doing on active storage (also known as computational storage) as part of the ExcaliData projects within Excalibur (the UK exascale software readiness programme).

Presentation: pdf (5.3 MB)

I started with why I was there - as a climate scientist (albeit one who worries about the necessary underlying infrastructure) at a storage meeting. The reason being that co-design of our computing systems extends way beyond codes and compute engines! Which of course is why ExcaliData exists, as an excalibur cross-cutting activity to support a range of exascale exadata problems. So we began with some “climate science makes lot of numbers” slides, the point of which being that “we make lots of numbers then we have to do stuff with them”.

The concept of active storage, that is storage that can do calculations, is an old one, but our innovation here is to limit those calculations to reductions (and any necessary decompresssion of data that has already been compressed at write-time). By limiting ourselves to reductions (a la MPI) we can push the knowledge of when to do those reductions in storage into task scheduling middleware - in our case, Dask. With some minor modifications to the Dask graph, the application can be completely unchanged, but take advantage of storage based reductions if they are available (and they make sense).

We currently modify the Dask graph in cf-python - but it would be better to do it directly in Dask. We don’t do that yet because we want to have some active storage implementations in the wild so that the Dask maintainers will see this as a beneficial capability.

We currently have one fully featured implementation as S3 middleware, allowing a server adjacent to S3 storage to do the reductions (using reductionist by StackHPC) - and one prototype implementation in a POSIX file system (DDN’s Infinia).

Extending this to more POSIX environments is why I was happy to accept the invite to talk to UKLUG — and so I included some discussion about issues around implementing support in Lustre.

It turns out the hard part of doing this is recognising that most of our data is chunked, and so reductions on arrays known to the application turn out to be partial reductions on storage chunks which are then reduced further up the stack. Given each chunk may have missing data, may be compresssed and/or filtered, and we probably only want some of it, we need a carefully constructed API.

Getting Lustre to support that API is a necessary condition for active storage, but there are others which are discussed in the talk.

- Climate Science - What lies beneath?

Meeting: NCAS@Reading Weekly Seminar

Reading, 23 June, 2023

Includes the following talk:

In this seminar I laid out the background to the new ENES infrastructure strategy, including not only the computing context but a good part of the science motivation for a spectrum of climate modelling activities. Below I sketch out a summary of a few of the points I made.

Presentation: pdf (2.3 MB)

I start with an introduction to ENES (the European Network for Earth System modelling) and define infrastructure in this context. The strategy presented builds on previous strategy exercises, and involved interviewing all the major European modelling groups and reviewing a bunch of current pan-European projects.

The computing context consists of the implications of changing computing hardware, the need for new maths and algorithms, the rise and rise of machine learning, and our need to pay more attention to cost (both energy and currency costs). The consequence is that our traditioanl expectation that we could just use any computer in different ways is no longer as true as it was (if it ever was). There are going to be new ways of dealing with data workflows, with more emphasis on handling ephemeral data - which will have implications for portability of the necessary ephemeral workflows. The big consequence is that we will need to start treating big modelling projects more like satellite missions.

The scientific landscape ranges from the WCRP lighthouses and big international projects to pan-European projects and large single model ensembles. There are important scientific and technical collaborations, and we need to deal with interfaces to other scientific communities as well as the climate service community.

A big part of most projects is addressing some aspect of model or scenario uncertainty, or the impact of climate variability - it is clear that the climate we have had is only one of many possible climates we might have had, and the same applies to the future. It’s not all about statistics, some aspects of risk need to be addressed by working backwards in causal networks - and when we do that we find important roles for many different types of models and modelling approaches.

Which model we use for a given problem is very much an issue of establishing whether or not is fit or adequate for the purpose, not just whether or not it has the best representation of reality. We see that play out in the variety of different models and resolutions used for the very many different parts of CMIP6. We have a range of different climate models targeting a range of different applications, and some of those models are capable of deployment on exascale computing, and some are not.

We are very aware of the impact of model diversity on uncertainty, but we address that with a very ad hoc approach to modelling - there is certainly a lot of apparent process diversity in the European climate modelling ecosystems, but it has arisen, not been cultivated. Could we cultivate and plan our model diversity? Is it too dependent on one ocean model? Will the advent of a commuinty ice model (SI3) and more use of the FESOM2 ocean make a difference? There is huge scope to plan - on a European scale - our approach to diversity going forward rather than just hope we get the right amount.

The presentation finishes with a summary of some of the recommendations, but as they are directly linked here, I’ll not bother with that summary here, simply remind the reader that a climate infrastructure strategy has to support the necessary scientific diversity, and address all our needs, including supporting and growing our workforce.

- Data Challenges for UK (global) k-scale modelling

- a reminder of the scale of the challenge (with a very old slide showing the number of numbers a 1km global run will produce),

- a list of challenges and issues to consider,

- some pieces of Excalibur and Esiwace funded research we have been doing in this space (on aggregations an ensemble analysis, more about those another day),

- some of the conclusions of the ENES infrastructure strategy1 which address workflow, and in particular how the advent of in-flight analysis on top of large communities of downstream analysts requires us to start treating big experiments more like satellite missions than personal research projects.

-

The draft strategy is available on the IS-ENES website, and should make it to zenodo soon. ↩

Meeting: UKNCSP Strategic Workshop on High Resolution Climate Modelling

Met Office, Exeter, June 16, 2023

Includes the following talk:

This was an inaugural meeting of the UK high resolution climate modelling community under the auspices of the new UK National Climate Science Partnership.

Presentation: pdf (650 KB)

I was asked for ten minutes on data challenges at high resolution. What I chose to present here was

- Perspectives on the Future of Climate Modelling

Meeting: Baljifest

GFDL, Princeton, via Zoom, May 15, 2023

Includes the following talk:

Baljifest was a celebration of the contributions of Balaji to climate modelling at GFDL and Princeton. There was a day of presentations, followed by a panel on the future of climate modelling. Saravanan and I kicked off the panel with a presentation each.

Presentation: pdf (1.8MB)

THe obvious place to start with an exercise in clairvoyance at a meeting like this was Balaji’s own papers, but I moved rapidly to the conclusions from the science underpinning to the ENES strategy for climate modelling infrastructure (which at the time were nearly complete).

With a computing environment which is getting ever more complex the historical simplicty of a choice between spending compute on some combination of resolution/complexity/duration/ensemble-size/data-assimilation is getting hard to continue. We may need different hardware for different types of problems, not different ways of using the same hardware. We will see more complex workflows, and more emphasis on in-flight diagnostics leading to additional hardware issues.

But the main focus of this talk was on the impact of uncertainty on the future of modelling, and how we need to use many different types of models to address a combination of model uncertainty, internal variability, and scenario uncertainty. In doing so, we recognise that there is a lot of effort on understanding processes that is hidden when we talk of CMIP, and the full spectrum of climate modelling is necessary - not all of which is suitable for exascale computing.

Whatever we do, we need to plan our interactions with model diversity to unlock our understanding of model uncertainty, and not just hope for the best. There is plenty of evidence that it is necessary, but we cannot continue with ad hoc approaches. There is a finite community who can put the necessary effort into maintaining models which can span the proliferation of hardware we face. And, in thinking about model diversity, we need to realise that we must not always strive for the models with the best representation of reality, because they may not be the best for a given task - but whatever we use, we need to know if is adequate for purpose.

- Generic lessons from data management in support of climate simulation workflows

Meeting: UK Turbulence Consortium, 2023 Annual Meeting

Imperial College, London, March 26, 2023

Includes the following talk:

An invited talk to the UK turbulence consortium which aimed to describe some of the activities the climate science commmunity has undertaken, and is undertaking, to deal with the data deluge.

Presentation: pdf (13 MB)

This is a talk in three parts, covering some motivation for investing in data management, some new developments underway to deal with high volume data, and a reminder of the importance of FAIR.

Part one aims to introduce other scientific communities to why and how climate science uses data management in delivering model intercomparison. Using examples from how the IPCC process uses CMIP data, there is a walk through some of the technology underpinning CMIP6 (the CF conventions, data analysis stacks, ESGF etc), and a description of some of the (especially European) data delivery. There is a good deal of motivation for why we do model intercomparison and how it both delivers and supports projections using scenarios. Examples of how high level software stacks remove labour from scientists are shown.

Part two describes some of the work being done in our Excalibur cross-cutting data projects, targeting those pieces most relevant to meeting attendees: Standards based Aggregation, Active Storage, and support for in-flight ensemble analysis.

Part three concludes the talk with a short discussion of how FAIR applies to simulation data.

- WP4 Data at Scale

- We showed some great results from our use of XIOS for ensembles, with scaling results from a one-hundred member N96 system and sixteen-member N512 system. We’re convinced that when we do this for real for high-resolution models the extra work to do the ensemble diagnostics will be lost in the noise. We have more work to do to do it for real though, including setting up real diagnostics for each member, and the new ensemble diagnostics. We’ve got a cool new CYLC system to handle an ensemble member crashing during the run.

- We also showed our plans for moving forward with semantic storage technologies as part of both the rest of ESiWACE2 and ExCALIBUR. There will be work on a new tape and object store interface for deployment on HPC platforms remote from the storage and analysis platform (e.g. for use on ARCHER2 writing to JASMIN), and on our new aggregation syntax (of which much more in the future).

Meeting: ESiWACE2 2021 General Assembly

Gotomeeting, September 27, 2021

Includes the following talk:

An update on progress in the ESiWACE2 fourth work package, WP4, data (systems) at scale.

Presentation: pdf (4 MB) </a>

We discussed the WP4 objectives and our progress on three different pieces of technology: support for in-flight ensemble diagnostics, semantic storage tools, and the Earth System Data Middleware (ESDM). I gave a description of the first two, and Julian described the ESDM and accompanying activities.

For my bits:

- Digital Twin Thinking for HPC in Weather and Climate

Meeting: 19th Workshop on high performance computing in meteorology

Zoom, September 20, 2021

Includes the following talk:

Presentation: pdf (0.3MB)

Digital twins are defined in many ways, but definitions usually have some combination of the words “simulation of the real world constrained by real time data”. These definitions basically encompass our understanding of the use of weather and climate models whether using data assimilation or tuning to history. It would appear that we (environmental science in general and weather and climate specifically) have been doing digital twins for a long time. So what then should new digital twin projects in our field aim to do that is different from our business as usual approach of evolving models and increasing use of data?

There seem to be two key promises associated with the current drive towards digital twinning: scenario evaluation and democratising access to simulations. To make progress we can consider two specific exemplar questions: (1) How can I better use higher-resolution local models of phenomenon X driven by lower-resolution (yet still expensive large-scale) global models?; and (2) How can I couple my socio-economic model into this system?

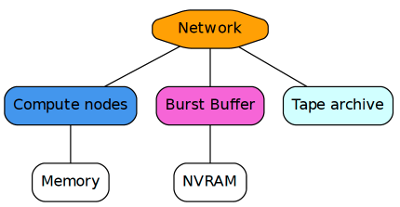

Delivering on these promises, even for just these two exemplars, requires some additional thinking beyond what we do now, and such thinking can take advantage of our changing computing environments, and in particular our use of tiered memory and storage, so that the burden of supporting these digital twin applications does not come with unaffordable complexity or cost in the primary simulation environment. It will also require much more transparency and community engagement in the experimental definition. In this talk I outline some of the possibilities in this space, building from existing technologies (software and hardware), models, and infrastructure (existing systems), and suggest some practical ways forward for delivering on some of the digital twin promises, some of which might even be possible within existing programmes such as the EC’s Destination Earth (DestinE).

This talk was part of the 19th Workshop on high performance computing in meteorology, and there is a recording available (not that I recommend listening all the way to the questions which I didn’t handle very well; I was rather tired when I gave this talk).

- ExCALIBUR for ESCAPE

Meeting: ESCAPE-2 Final Dissemination Workshop

Zoom, September 03, 2021

Includes the following talk:

ExCALIBUR for ESCAPE

Presentation: pdf (1.7MB)

The Exascale Computing ALgorithms & Infrastructures for the Benefit of UK Research (ExCALIBUR) programme is a five year activity with two delivery partners: the Met Office(for PSREs amd the NERC community) and the Engineering and Physical Sciences Research Council (EPSRC). The aim of the project is to redesign high priority simulation codes and algorithms to fully harness the power of future supercomputers, keeping UK research and development at the forefront of high-performance simulation science.

This talk (part of the ESCAPE-2 Final Dissemination Workshop) outlines key aspects of the programme, including a summary of the priority use cases with a bit more detail about the environmental science projects (which are all related to the next generation modelling system which will replace the current Unified Model). Some cross-cutting areas are listed and there is a summary of our ExCALIData project (see my last talk).

- From ESiWACE to ExCALIDATA

- An Introduction to ExCALIDATA

- support for optimal (performant) use of a given system’s storage configuration (portable) for their particular application without having to know the details of the system configuration (productive) in order to configure their application; and

- deliver a completely new paradigm for where certain computations are performed by reducing the amount of data movement needed, particularly in the context of ensembles (but implicitly, make sure that this can be done productively, and results in portable code which is optimally performant in any given environment).

- storage interfaces, and our our idea of semantically interesting “atomic_datasets” as an interface to multiple constituent data elements (quarks) distributed across storage media.

- how best we can use fabric and solid state storage. There are a plethora of technology options, but how can we deploy some of them for real? Which ones?

- A comparison of I/O middleware (including the domain specific ESDM, and the generic ADIOS2)

- Active Storage in software, and

- Active Storage in hardware

- Extending I/O server functionality that we developed in ESiWACE for “atmosphere only” workflows for coupled model workflows.

Meeting: Benchmarking for ExCALIBUR Update

Zoom, September 02, 2021

Includes the following talks:

From ESiWACE to ExCALIData

I gave a talk to the ExCALIBUR benchmarking community about the work we plan to do in the ExCALIBUR programme. This new activity is called ExCALIData, and really comprises two projects ExCALIStore and ExCALIWork. It is “cross-cutting” work funded as part of the Met Office strand of ExCALIBUR to deliver cross-cutting research in support of all programme elements (see the presentation in my next post for details). The entire programme is a national programme with two funding strands, one for public sector research establishments and the NERC environmental science research community, and one delivered by EPSRC for the rest of the UKRI community.

Excalibur itself is a multi-million pound multi-year project which aims to deliver software suitable for the next generation of supercomputing at scale - the “exascale” generation. The two ExCALIData projects have been funded in response to a call which essentially asked for two projects which addressed:

The call asked for particular application to any one of the existing Excalibur use cases but a plan for how it might be applicable to others. We of course addressed climate modelling as our first use-case, but in doing so aimed to address a couple of other use cases and build some generic tools.

We were in a good place to bid for this work building on the back of our EsIWACE activities on I/O and workflow, so the talk I gave to the benchmarking community was in two halves: the first half addressed the problem statement and some of the things we have been doing in ESiWACE, and the second described the programme of work we plan in Excalibur.

From EsiWACE to ExCALIBUR

Presentation: pdf (1.6MB)

This was a short motivation for our particular problem in terms of massive grids of data from models and how increasing resolution leads (if nothing is done) to vastly more data to store and analyse. The trends in data volumes match the trends in computing and will lead to exabytes of simulation data before we hit exaflops. Our exabytes are a bit more difficult to deal with (in some ways) than Facebook’s, but like all the big data specialists, as a community we are building our own customised computing environments - in this case we are specialising the analysis environment sooner than we have specialised the simulation platform (but that too is coming).

In WP4 of EsiWACE(2) we have been working to “mitigate the effects of the data deluge from high-resolution simulations”, by working on tools for carrying out ensemble statistics “in-flight” and on tools to hide storage complexity and deliver portable workflows. There were several components to that work: the largest of which has been the development of the Earth System Data Middleware (ESDM), an activity led by Prof Julian Kunkel. The others were mainly led by me, and are the main thrust of the ExCALIData work, although we will be doing some comparisons of other technologies with the ESDM.

An Introduction to ExCALIData

Presentation: pdf (1.6 MB)

ExCALIData aims to address the end-to-end workflow for (large)data in, simulation, (larger)data out, analysis, store workflows. We designed two complementary projects to address the two goals in the call with six main activities (three to each project) and one joint work package on knowledge-exchange. (They were designed in such a way that only one could have been funded, but we could get synergy from having two.)

The six work packages address

For the active storage work the key idea is to deploy something actually usable by scientists and build two demonstrator storage systems which deliver the active storage functionality.

Not surprisingly, this is a complex project, and we needed a lot of partners, in this case: DDN and StackHPC to help us with the storage servers and the University of Cambridge to help us with some of the more technical hardware activities (especially the work on fabric, solid state, storage, and the benchmarking of comparative solutions).

- Data Systems at Scale, Mid-Term Update

- our work on ensemble handling. Amongst other activities we have demonstrated “in-flight ensemble data processing” with a large high resolution ensemble (10 member global 25 km resolution using 50K cores).

- progress with the Earth System Data Middleware (ESDM), which includes NetCDF interface and some new backends, and

- work by our industry colleagues, Seagate and DDN both on ESDM backends and on new active storage systems which will be able to do simple manipulations “in storage”, and

- our work on S3NetCDF, a drop in extension for netcdf-python suitable for use with object stores.

Meeting: ESIWACE2 Mid-Term Review

GotoMeeting, October, 2020

Includes the following talks:

Data Systems at Scale

Presentation: pdf (0.5MB)

This was a presentation given as part of the esiwace mid-term review. It describes some of the progress made in one of the work packages, WP4, which is about data systems at scale. Here the definition of “at scale” means “for weather and climate simulation on exascale (and pre-exascale) supercomputing” and for downstream analysis systems.

I have an older post linking to a talk describing the work package objectives.

In this presentation I highlighted

- When (and how) should a simulation be FAIR?

Meeting: Earthcube - What about Data?

Boulder via Hangout, May 2020

Includes the following talks:

When (and how) should a simulation be Fair?

Presentation: pdf (4 MB).

After a reminder about FAIR (Findable, Accessible, Interoperable, Reusable - not Reproducible), I discuss some of the issues about applying these concepts to Simulation Data. Apart from the volume issues, we have to decide just what “Simulation Data” actually means, does it mean the entire workflow, or just the outputs. There are a huge variety of types of simulation, are they all important? Using the ES-DOC vocabularies, I point out that in practice simulations are not meaningfully reproducible - except in trivial cases, and actually it is more important to reproduce experiments - which is of course the heart of model intercomparison. At CEDA we have two decades of experience curating data, and we now use the JASMIN platform to provide a data commons to make data accessible. Lots of simulation data is analysed on JASMIN, alongside the CEDA archive, but for fifteen years we have made decisions about whether to archive simulation data based on (what was then) the BADC Numerical Model Data Policy. The key insight in developing that policy was that it’s relatively easy to decide what’s important, and what’s not important, but there is a lot of middle ground for which value judgements are important. We developed polices to decide on the important and not important data, and to guide the value judgements, and these are summarised here. Underlying all decisions are questions about affordabilty, and whether or not adequate metadata can be (or will be) produced. Without such metadata curation of simulation data becomes pointless. The bottom line is that not all simulation data should be FAIR, but that which needs to be FAIR needs to be well documented. Money matters as simulation data at scale is incredibly expensive.

- Challenges facing the modelling community

Meeting: The UM User's Workshop 2019 - Next Generation Modellin Systems

Exeter, June, 2019

Includes the following talks:

Challenges facing the modelling community

Presentation: pdf (8 MB)

This was a talk given to set the scene for the Next Generation Modelling System sessions of the 2019 Unified Model (Um) Users workshop - a meeting of the international UM partnership bringing together indivudals from the US, Korea, Australia, NZ, and the UK.

It was a shortened version of my seminar on the end of climate modelling, where I was aiming to explain to some of the attendees why the radical changes in model structure coming with our next generation LFRic model are necessary given some of the technologies in play. I was able to omit some of the key parts of the seminar because there were other speakers addressing those subjects (e.g. Rupert Ford was up later talking about Domain Specific Languages).

- Data Systems at Scale

- portability, to ensure a wider range of stakeholders,

- containerisation, to deliver as much as possible within userspace, and

- vendor engagement alongside open-source products, allowing differentiation in the market and realistic business models for those developing customised high-performance storage solutions.

Meeting: ESIWACE2 Kick-Off

Hamburg, March, 2019

Includes the following talks:

Data Systems at Scale

Presentation: pdf (0.5MB)

This was a presentation given as part of the esiwace2 kickoff. It describes one of the work packages, WP4, which is about data systems at scale. Here the definition of “at scale” means “for weather and climate simulation on exascale (and pre-exascale) supercomputing” and for downstream analysis systems. We expect I/O performance and data volumes will limit their exploitation without new storage and analysis libraries.

This work package describes an ambitious programme of work, building on lessons learnt from ESiWACE. It will address a range of issues in workflow, from modifications to a workflow engine (cylc) and a scheduler (slurm), to new I/O libraries and data handling tools. Some integration with pangeo is likely. There is a key role for ensemble analysis tools to mitigate aqainst writing data in the first place.

A key goal will be to deploy these new tool in real workflows, within the project, and elsewhere, and to develop them in a way that has a credible path to sustainability. In practice deployability is likely to involve three key facets:

The work package is being led from the University of Reading (Computer Science, and NCAS), with partners from CNRS-IPSL, STFC, Seagate, DDN, DKRZ, ICHEC, and the Met Office.

(See mid-term update for an update on progress.)

- The end of Climate Modelling as we know it

- More use of hierarchy of models;

- Precision use of resolution;

- Selective use of complexity;

- Less use of “ensembles of opportunity”, much more use of “designed ensembles”;

- Duration and Ensemble Size : thinking harder a priori about what is needed;

- Much more use of emulation.

Meeting: NCAS Seminar

Reading, March, 2019

Includes the following talks:

The end of Climate Modelling as we know it

Presentation: pdf (15 MB)

This was a seminar given in Meteorology at the University of Reading as part of the NCAS seminar series.

In this presentation I was aiming to introduce a meteorology and climate science community to the upcoming difficulties with computing: from the massive explosion in hardware variety that we associate with the end of Moore’s Law, to the fact that in about a decade we may only be able to go faster by spending more money on power (provided we have the parallelisation). Similar problems with storage arising from both costs and bandwidth are coming …

I set this in the scene of our existing experience of decades of unbridled increases in computing at more or less the same power consumption until the last decade when we’ve still had more compute, but at the same power cost per flop (for CPUs at least).

Of course it wont mean the end of climate science, and it will probably take longer to arrive than currently being predicted, but it does mean a change in the way we organise ourselves, in terms of sharing data and experiment design, and it certainly means “going faster” will require being smarter, with maths, AI etc.

This seminar differs from my last one in that I didn’t talk about JASMIN at all, and I spent more time on the techniques we will need to cross the chasm between science code and the changing hardware.

I finished with some guidance for our community: we will need to become better at using the right tool(s) for the job, rather than treating everything as a nail because we have a really cool hammer:

- Supercomputers are no longer all the same and it will get worse

- Climate modelling for computer scientists, to get across the size of the computational and data handling problems, leading to

- The changing way we deal with simulation data - from “download” to “remote access”, and the need for

- New data analysis supercomputers, and in particular, JASMIN.

- A discussion of Moore’s Law, and it’s demise, and

- How we can still make progress in (climate) science with new maths (including AI and ML), new algorithms, new programming techniques, and new ways of working with data.

Meeting: Computer Science Seminar

Reading, February, 2019

Includes the following talks:

Supercomputers are no longer all the same and it will get worse; a climate modelling perspective

Presentation: pdf (30 MB)

This was a seminar given in Computer Science at the University of Reading. I was aiming to kick the ball through a few balls at once, being introductions to

From there I switched gear to

The bottom line I wanted to get across to this audience was that there is lots of scope for computer science, and lots of interesting things to do.

- Trends and Context for Environmental HPC

- How does our science face a future where computers do not run faster? Are there known algorithmic routes to improve time to solution for fixed FLOPs (but maybe enhanced parallelism) that we are not yet exploring? What else can we do?

- Which HPC related international collaborations do we depend upon, and how can we sustain these, intellectually and financially?

- What are the implications of the new UKRI funding routes (e.g. Industrial Strategy Challenge Fund) for environmental HPC resource requirements (software, hardware, people)?

- How do we gather information about the impact of our HPC related research? What are the routes to demonstrating economic impact?

- The NERC HPC recurrent budget is currently fixed, but there is usage growth via both growth within the existing communities, and the addition of new communities.

- Future computing will not be any faster than current computing, we will only get to do-more/go-faster by expending energy (electricy) and by being smarter about using parallelism, by algorithms, or software, or both.

- HPC platforms are changing, with more heterogeneity and customisation, and that’s happening just as we have to take on machine learning as a new tool.

- NERC needs plans and evidence to justify HPC infrastructure, now and into the future, especially if we want to grow it!

- We have ambitious international scientific competitors — both a threat (if we can’t compete computationally) and an opportunity (for collaboration)

- All of which is why NERC needs a strategy …

Meeting: NERC HPC Strategy

London, November, 2018

Includes the following talks:

Trends and Context for Environmental HPC

Presentation: pdf (7 MB)

This was the kickoff talk to a NERC “town hall meeting” workshop envisaged as part of the establishment of a formal NERC HPC strategy. After my talk, we had a three component workshop: some international talks, some breakout discussions, and some local talks. The main aim of the meeting was

To begin a process of establishing and maintaining a NERC strategy for high performance computing which reflects the scientific goals of the UK environmental science community, government objectives, and the need for international competitiveness.

The breakout groups covered four themes:

… and my talk was attempting to provide an introduction and context for these objectives and breakouts. The key point of course is that free lunch of easy (and relatively cheap) computing is over. Advancing along our traditional axes of resolution, complexity, ensembles etc, will be complicated by harder to use and (relatively) more expensive computing and storage.

I finished with six key points:

(Incidentally, the preference for lots of post-it notes in this presentation is a homage to town-hall meetings past and their flip-charts and “stick your comment on these posters” sesssions! EPSRC, I’m looking at you :-) )

- Beating Data Bottlenecks in Weather and Climate Science

Meeting: Extreme Data Workshop

Jülich, September, 2018

Includes the following talks:

Beating Data Bottlenecks in Weather and Climate Science

Presentation: pdf (6 MB)

The data volumes produced by simulation and observation are large, and becoming larger. In the case of simulation, plans for future modelling programmes require complicated orchestration of data, and anticipate large user communities. “Download and work at home” is no longer practical for many applications. In the case of simulation these issues are exacerbated by users who want simulation data at grid point resolution instead of at the resolution resolved by the mathematics, and who design numerical experiments without knowledge of the storage costs.

There is no simple solution to these problems: user education, smarter compression, and better use of tiered storage and smarter workflows are all necessary - but far from sufficient. In this presentation we introduce two approaches to addressing (some) of these data bottlenecks: dedicated data analysis platforms, and smarter storage software. We provide a brief introduction to the JASMIN data storage and analysis facility, and some of the storage tools and approaches being developed by the ESIWACE project. In doing so, we describe some of our observations of real world data handling problems at scale, from the generic performance of file systems to the difficulty of optimising both volume stored and performance of workflows. We use these examples to motivate the two-pronged approach of smarter hardware and smarter software - but recognise that data bottlenecks may yet limit the aspirations of our science.

(At at a workshop organised by Martin Schultz at Jülich)

- Building a community hydrological model

Meeting: Open Meeting for Hydro-JULES - Next generation land-surface and hydrological predictions

Wallingford, September, 2018

Includes the following talks:

Building a Community Hydrological Model

Presentation: pdf (9 MB)

In this talk we introduce how NCAS will be supporting the Hydro-Jules Project by designing and implmenting the modelling framework and building and maintaing and archive of driving data, model configurations, and supporting datasets. We will also be providing training and support for the community on the JASMIN supercomputer.

We also discuss some of the issue that HydroJules will need to prepare for, including the impending change to the UK Unified Modelling modelling framework and exascale computing.

- The Changing Nature of JASMIN

Meeting: JASMIN User Conference

Milton, June, 2018

Includes the following talks:

The Changing Nature of JASMIN

Presentation: pdf (9 MB)

This talk was part of a set of four to give attendees at the JASMIN user conference some understanding of the recent and planned changes to the physical JASMIN environment.

The introduction covers a logical and schematic view of the JASMIN system and why it exists, before three sections covering the compute usage, data movement, and storage growth over recent years. JASMIN shows nearly linear growth in total users, active users with data acess and active users of both the LOTUS batch cluster and the interactive generic science machines. Despite the changing size of the batch cluster (it has grown in size) we have managed to keep utilisation in the target 60-80% range (we have deliberately targeted a lower utilisation rate so as to allow the use of batch to be more immediate, given that keeping the data online is the more expensive part of this system). Usage of the managed cloud systems has been substantial, and the cloud itself has grown, targetting more customised solutions for a wide array of tenants. External cloud usage has been relatively low, which reflects the lack of elasticity and its usage for primarily pets rather than cattle. Where JASMIN is really unique however is in the amount of data movement invovled in the day to day business, with PB/day being sustained in the batch cluster for significant periods. Archive growth has been capped, but shows some interesting trends, as does the volume of Sentinel data held - and overall growth has been linear despite a range of external constraints and self-limiting behaviours. Elastic tape usage started small, but has become more signficant as disk space constraints have become more of an issue - this despite a relatively poor set of user facing features.

These factors (and others) led to the 2017/18 phase 4 capital upgrade which is being deployed now, with a range of new storage types. Looking forward, it is clear that the “everything on disk” is probably not the right strategy and we have to look to smarter use of tape.

- Climate Data: Issues, Systems and Opportunities

Meeting: Data-Intensive weather and climate science

Exeter, June, 2018

Includes the following talks:

Climate Data: Issues, Systems, and Opportunities

Presentation: pdf (25 MB)

The aim of this talk was to introduce students at the Mathematics for Planet Earth summer school in Exeter to some of the issues in data science. I knew I would be following Wilco Hazeleger who was talking on Climate Science and Big Data, so I didn’t have to hit all the big data messages.

My aim was to cover some of the usual isues around heterogeneity and tools, but really cover some of the upcoming volume issues, using as many real numbers as I could. One of the key points I was making was that as we go forward in time, we are moving from a science dominated by the difficulty of simulation to one that will be dominated by the difficulty of data handling - and that problem is really here now, although clearly the transition to exascale will involve problems with both data and simulation. I also wanted to get across some o fthe difficulties associated with next generation model intercomparison - interdependency and data handling - and how those apply to both the modellers themselves as well as the putative users of the simulation data.

The 1km model future is interesting in terms of data handling. I made a slightly preposterous extrapolation (an exercise for the reader is to work out what is preposterous) … but only to show the potential scale of the problem, and the many opportunities for doing something about it.

The latter part of the talk covered some of the same ground as my data science talk from March, to give the students a taste of some of the things that can done with modern techniques and (often) old data.

The last slide was aimed at reminding folks that climate science has always been a data science, and actually, always a big data science! Climate data is always large compared to what others are actually handling … and that we have always managed to address the upcoming data challenges. I hope now will be no different.

- Weather and Climate Requirements for EuroHPC

Meeting: EuroHPC Requirements Workshop

Brussels, June, 2018

Includes the following talks:

EuroHPC: Requirements from Weather and Climate

Presentation: pdf (9 MB)

Abstract: pdf

This talk covered requirements for the upcoming pre-exascale and exascale computers to be procured by the EuroHPC project. The bottom line is that Weather and Climate have strong constraints on HPC environnments, and we believe that procurement benchmarks should measure science throughput in science units (in our case Simulated Years Per (real) Day, SYPD). We also recommend that the EuroHPC project takes cognisance of the fact that HPC simulations do not directly generate knowledge, the knowledge comes from analysis of the data products!

- Opportunities and Challenges for Data Science in (Big) Environmental Science

Meeting: Data Sciences for Climate and Environment (Alan Turing Institute)

London, March, 2018

Includes the following talks:

Opportunities and Challenges for Data Science in (Big) Environmental Science

Presentation: pdf (18 MB) (See also video).

I was asked to give a talk on data science in climate science. After working out what “data science” might mean for this audience, I took a rather larger view of what was needed and talked about data issues in environmental science, before quickly talking about hardware and software platform issues. Most of the talk covered a few applications of modern data science: data assimilation, classification, homogenising data, and using machine learning to infer new products. I finished by reminding everyone that in collaborations between climate science and statisticians and computer scientists, we need to be careful about our use of the word “model” (with a bit of help from xkcd). I finished with reminding everyone that climate science has always been a data science.

The full set of videos from all the speakers is available.

- Exploiting Weather & Climate Data at Scale (WP4)

Meeting: ESiWACE General Assembly

Berlin, December, 2017

Includes the following talks:

Exploiting Weather & Climate Data at Scale (WP4)

Presentation: pdf (4 MB)

This was a talk I would have given in partnership with Julian Kunkel, but as I was still at home thanks to a wee bit of cold air causing chaos at LHR, Julian had to give all of it. The version linked here is the version I would have given, the actual version Julian gave will (eventually) be available on the ESIWACE website.

The talk covers the “exploitability” component of the ESIWACE project. The work we describe is development of cost models for exascale HPC storage, plus new software to write and manage data at scale.

- The Data Deluge in High Resolution Climate and Weather Simulation

Meeting: European Big Data Value Forum

Versailles, November, 2017

Includes the following talk:

The Data Deluge in High Resolution Climate and Weather Simulation

Presentation: pdf (5 MB).

This talk was given by Sylvie Joussaume, but we had worked on it together, so I think it’s fair enough to include here. We wanted to show the scale of the data problems we have in climate science, and some of the direction in which we are moving with respect to “big data” technologies and algorithms.

- Data Interoperability and Integration - A climate modelling perspective.

- Mobilising community support and advice for discipline-based initiatives to develop online data capacities and services;

- Priorities for work on interdisciplinary data integration and flagship projects;

- Approaches to funding and coordination; and

- Issues of international data governance.

Meeting: Science and the Digital Revolution - Data, Standards, and Integration

Royal Society, London, November, 2017

Includes the following talk:

Data Interoperability and Integration: A climate modelling perspective

Presentation: pdf (11.5 MB).

I was asked to give a talk at a CODATA meeting which was aimed at developing a roadmap for:

For this talk I was asked to address an example from the WMO research community on what we have accomplished in standardising a range of things, and reflecting on what has worked/failed and why. I wasn’t given much time to prepare, so this is what they got!

- Performance, Portability, Productivity - Which two do you want?

Meeting: Gung Ho Network Meeting

Exeter University, July 2017

Includes the following talk:

Performance, Portability, Productivity: Which two do you want?

Presentation: pdf (4 MB).

I talked about two papers that I’ve recently been involved with: “CPMIP: measurement of real computational performance of Earth System Models in CMIP6” (which appeared in early 2017) and “Crossing the Chasm: How to develop weather and climate models for next generation computers?” which at the time was just about to be submitted to GMD.

- The UK JASMIN Environmental Commons

- The UK JASMIN Environmental Commons - Now and into the Future

Meeting: International Supercomputing (ISC) and JASMIN User Conferences

Frankfurt and Didcot, June 2017

Includes the following talks:

I gave two versions of this talk, one at at the International Supercomputing Conference’s Workshop on HPC I/O in the data centre, and one at the 2017 JASMIN User’s Conference.

The talk covered the structure and usage of JASMIN, showing there is a lot of data movement both in the batch system and the interactive environment. One key observation was that we cannot afford to carry on with parallel disk, and we don’t think tape alone is a solution, so we are investigating object stores, and object store interfaces.

The UK JASMIN Environmental Commons

Presentation: pdf (12 MB - the ISC version).

The UK JASMIN Environmental Commons: Now and into the Future

Presentation: pdf (12 MB - the JASMIN user conference version).

- Data Centre Technology to Support Environmental Science

Meeting: NERC Town Hall Meeting on Data Centre Futures

London, October, 2016

Includes the following talk:

Data Centre Technology to Support Environmental Science

Presentation: pdf (18 MB).

This was a talk given at a NERC Town Hall meeting on the future of data centres in London, on the 13th of October 2016. My brief was to talk about underlying infrastructure, which I did here by discussing the relationship between scientific data workflows and the sort of things we do with JASMIN.

- Computer Science Issues in Environmental Infrastructure

Meeting: Meteorology meets Computer Science Symposium

University of Reading, September 2016

Includes the following talk:

Computer Science Issues in Environmental Infrastructure

Presentation: pdf (14 MB).

This was a talk at a University of Reading symposium held with Tony Hey, Geoffrey Fox and Jeff Dozier as guest speakers as part of the introduction o the new Computer Science Department in the Reading University School of Mathematical, Physical and Computer Sciences SMPCS.

The main aim of my talk was to get across the wide range of interesting generic science and engineering challenges we face in delivering the infrastructure needed for environmental science.

- Science Drivers - Why JASMIN?

Meeting: JASMIN User Conference

RAL, June, 2016

Includes the following talk:

Science Drivers: Why JASMIN?

Presentation: pdf (19 MB).

Keynote scene setter for the inaugural JASMIN user conference: how the rise of simulation leads to a data deluge and the necessity for JASMIN, and a programme to improve our data analysis techniques and infrastructure.

- A ten minute introduction to ES-DOC technology

Meeting: CEDA Vocbulary Meeting

RAL, March, 2016

Includes the following talk:

A ten minute introduction to ES-DOC technology

Presentation: pdf (2 MB).

A brief introduction to some of the basic tools being use to define ES-DOC CIM2 and the CMIP6 extensions.

- UK academic infrastructure to support (big) environmental science

Meeting: International Computing in Atmospheric Science (ICAS)

Annecy, September, 2015

Includes the following talk:

UK academic infrastructure to support (big) environmental science

Presentation: pdf (18 MB).

Abstract: Modern environmental science requires the fusion of ever growing volumes of data from multiple simulation and observational platforms. In the UK we are investing in the infrastructure necessary to provide the generation, management, and analysis of the relevant datasets. This talk discusses the existing and planned hardware and software infrastructure required to support the (primarily) UK academic community in this endeavour, and relates it to key international endeavours at the European and global scale – including earth observation programmes such as the Copernicus Sentinel missions, the European Network for Earth Simulation, and the Earth System Grid Federation.

- Why Cloud? Earth Systems Science

Meeting: RCUK Cloud Workshop

Warwick, June, 2015

Includes the following talk:

Why Cloud? Earth Systems Science

Presentation: pdf (6 MB).

Alternative title: Data Driven Science: Bringing Computation to the Data. This talk covered the background trends and described the JASMIN approach.

- Beating the tyranny of scale with a private cloud configured for Big Data

Meeting: EGU

Vienna, April, 2015

Includes the following talk:

Beating the tyranny of scale with a private cloud configured for Big Data

Presentation: pdf (5 MB).

At the last minute I found that I wasn’t able to attend, but Phil Kershaw gave my talk. The abstract is available here (pdf).

- It starts and Ends with Data- Towards exascale from an earth system science perspective

- Bringing Compute to the Data

Meeting: Big Data and Extreme-Scale Computing (BDEC)

Barcelona, January, 2015

Includes the following talks:

There were two back to back meetings organised as part of the 2015 Big Data and Extreme Computing meeting website. In the first, organised as part of the European Exascale Software Initiative (EESI), I gave a full talk, in the second, I provided a four page position paper with a four page exposition.

It starts and Ends with Data: Towards exascale from an earth system science perspective

Presentation: pdf (7 MB).

Six sections: the big picture, background trends, hardware issues, software issues, workflow, and a summary.

Bringing Compute to the Data

Presentation: pdf (3 MB).

This was my main BDEC contribution. There was also a four page summary paper: pdf.

- Leptoukh Lecture - Trends in Computing for Climate Research

Meeting: AGU Fall Meeting

San Francisco, December, 2014

Includes the following talk:

I was honoured to be the third recipient of the AGU Leptoukh Lecture awarded for significant contributions to informatics, computational, or data sciences.

Trends in Computing for Climate Research

Presentation: pdf (30 MB).

Abstract:

The grand challenges of climate science will stress our informatics infrastructure severely in the next decade. Our drive for ever greater simulation resolution/complexity/length/repetition, coupled with new remote and in-situ sensing platforms present us with problems in computation, data handling, and information management, to name but three. These problems are compounded by the background trends: Moore’s Law is no longer doing us any favours: computing is getting harder to exploit as we have to bite the parallelism bullet, and Kryder’s Law (if it ever existed) isn’t going to help us store the data volumes we can see ahead. The variety of data, the rate it arrives, and the complexity of the tools we need and use, all strain our ability to cope. The solutions, as ever, will revolve around more and better software, but “more” and “better” will require some attention.

In this talk we discuss how these issues have played out in the context of CMIP5, and might be expected to play out in CMIP6 and successors. Although the CMIPs will provide the thread, we will digress into modelling per se, regional climate modelling (CORDEX), observations from space (Obs4MIPs and friends), climate services (as they might play out in Europe), and the dependency of progress on how we manage people in our institutions. It will be seen that most of the issues we discuss apply to the wider environmental sciences, if not science in general. They all have implications for the need for both sustained infrastructure and ongoing research into environmental informatics.

- Weather and Climate modelling at the Petascale - Achievements and perspectives. The roadmap to PRIMAVERA

- Infrastructure for Environmental Supercomputing - Beyond the HPC!

Meeting: Symposium on HPC and Data-Intensive Apps

Trieste, November, 2014

Includes the following talks:

Or to give it it’s full name: Symposium on HPC and Data-Intensive Applications in Earth Sciences: Challenges and Opportunities@ICTP, Trieste, Italy.

I gave two talks at this meeting, the first in the HPC regular session, on behalf of my colleague Pier Luigi Vidale, on UPSCALE, the second a data keynote on day two.

Weather and Climate modelling at the Petascale: achievements and perspectives. The roadmap to PRIMAVERA

Presentation: pdf (37 MB).

Abstract:

Recent results and plans from the Joint Met Office/NERC High Resolution Climate Modelling programme are presented, along with a summary of recent and planned model developments. We show the influence of high resolution on a number of important atmospheric phenomena, highlighting both the roles of multiple groups in the work and the need for further resolution and complexity improvements in multiple models. We introduce plans for a project to do just that. A final point is that this work is highly demanding of both the supercomputing and subsequent analysis environments.

Infrastructure for Environmental Supercomputing: beyond the HPC!

Abstract:

We begin by motivating the problems facing us in environmental simulations across scales: complex community interactions, and complex infrastructure. Looking forward we see the drive to increased resolution and complexity leading not only to compute issues, but even more severe data storage and handling issues. We worry about the software consequences before moving to the only possible solution, more and better collaboration, with shared infrastructure. To make progress requires moving past consideration of software interfaces alone to consider also the “collaboration” interfaces. We spend considerable time describing the JASMIN HPC data collaboration environment in the UK, before reaching the final conclusion: Getting our models to run on (new) supercomputers is hard. Getting them to run perfomantly is hard. Analysing, exploiting and archiving the data is (probably) now even harder!

Presentation: pdf (22 MB )

- JASMIN - A NERC Data Analysis Environment

Meeting: NERC ICT Current Awareness

Warwick, October, 2014

Includes the following talks:

JASMIN - A NERC Data Analysis Environment

Presentation: pdf (18MB).

The talk basically covered an explanation of what JASMIN actually consists of, and provides, and it’s relationship to the Cloud. It included some discussion of why JASMIN exists in the context of the growing data problem in the community.

- The influence of Moore's Law and friends on our computing environment!

Meeting: NCAS Science Meeting

Bristol, July, 2014

Includes the following talks:

The influence of Moore’s Law and friends on our computing environment!

Presentation: pdf (19 MB).

I gave a talk on how Moore’s Law and friends are influencing atmospheric science, the infrastructure we need, and how we trying to deliver services to the community.

- Environmental Modelling at both large and small scales: How simulating complexity leads to a range of computing challenges

- The road to exascale for climate science: crossing borders or crossing disciplines, can one do both at the same time?

- JASMIN: the Joint Analysis System for big data

Meeting: e-Research NZ

Hamilton, June/July, 2014

Includes the following talks:

I gave three talks at this meeting:

Environmental Modelling at both large and small scales: How simulating complexity leads to a range of computing challenges

Presentation: pdf (2 MB).

On Monday, in the HPC workshop, despite using the same title I had for the Auckland seminar, I primarily talked about the importance of software supporting collaboration, using coupling as the exemplar (reprising some of the material I presented in Boulder in early 2013).

The road to exascale for climate science: crossing borders or crossing disciplines, can one do both at the same time?

Presentation: pdf (9 MB)

On Tuesday I gave the keynote address:

Abstract: The grand challenges of climate science have significant infrastructural implications, which lead to requirements for integrated e-infrastructure - integrated at national and international scales, but serving users from a variety of disciplines. We begin by introducing the challenges, then discuss the implications for computing, data, networks, software, and people, beginning from existing activities, and looking out as far as we can see (spoiler alert: not far!)

JASMIN: the Joint Analysis System for big data

pdf (5 MB)

On Wednesday I gave a short talk on JASMIN:

Abstract: JASMIN is designed to deliver a shared data infrastructure for the UK environmental science community. We describe the hybrid batch/cloud environment and some of the compromises we have made to provide a curated archive inside and alongside various levels of managed and unmanaged cloud … touching on the difference between backup and archive at scale. Some examples of JASMIN usage are provided, and the speed up on workflows we have achieved. JASMIN has just recently been upgraded, having originally been designed for atmospheric and earth observation science, but now being required to support a wider community. We discuss what was upgraded, and why.

- The Future of ESGF in the context of ENES and IS-ENES2

- We as a community have too much data to handle, and I mentioned the apocryphal estimate that only 2/3 of data written is read … but I confused folks … that figure applies to institutional data, not data in ESGF …

- That the migration of data and information between domains (see the talk) requires a lot of effort, and that (nearly) no one recognises or funds that effort (kudos to KNMI :-),

- That portals are easy to build, but hard to build right, and maybe we need fewer, or maybe we need more, but either way, they need to both meet requirements in technical functionality, and information (as opposed to data) content.

Meeting: IS-ENES2 Kickoff Meeting

Paris, France, May, 2013

Includes the following talk:

The Future of ESGF in the context of ENES and IS-ENES2

Presentation: pdf (2MB).

I probably tried to do too much in this talk. There were three subtexts:

- Bridging Communities - Technical Concerns for Building Integrated Environmental Models

Meeting: Coupling Workshop (CW2013)

Boulder, Colorado, February, 2013

Includes the following talk:

Bridging Communities: Technical Concerns for Building Integrated Environmental Models

Presentation: pdf (1MB).

- Data, the elephant in the room. JASMIN one step along the road to dealing with the elephant.

Meeting: 2nd IS-ENES Workshop on High-performance computing for Climate Models

Toulouse, France, January, 2013

Includes the following talk:

Data, the elephant in the room. JASMIN one step along the road to dealing with the elephant.

Presentation: pdf (2MB).

- Issues to address before we can have an open climate modelling ecosystem

Meeting: AGU Fall meeting

San Francisco, December, 2012

Includes the following talks:

Issues to address before we can have an open climate modelling ecosystem

Presentation: pdf (2 MB).

Authors: Lawrence, Balaji, DeLuca, Guilyardi, Taylor

Abstract Earth system and climate models are complex assemblages of code which are an optimisation of what is known about the real world, and what we can afford to simulate of that knowledge. Modellers are generally experts in one part of the earth system, or in modelling itself, but very few are experts across the piste. As a consequence, developing and using models (and their output) requires expert teams which in most cases are the holders of the “institutional wisdom” about their model, what it does well,and what it doesn’t. Many of us have an aspiration for an open modelling ecosystem, not only to provide transparency and provenance for results, but also to expedite the modelling itself. However an open modelling ecosystem will depend on opening access to code, to inputs, to outputs, and most of all, on opening the access to that institutional wisdom (in such a way that the holders of such wisdom are protected from providing undue support for third parties). Here we present some of the lessons learned from how the metafor and curator projects (continuing forward as the es-doc consortium) have attempted to encode such wisdom as documentation. We will concentrate on both technical and social issues that we have uncovered, including a discussion of the place of peer review and citation in this ecosystem.

(This is a modified version of the abstract submitted to AGU, to more fairly reflect the content given the necessity to cut material to fit into the 15 minute slot available.)

- Exploiting high volume simulations and observations of the climate

Meeting: ESA CCI 3rd Colocation Meeting

Frascati, September, 2012

Includes the following talks:

Exploiting high volume simulations and observations of the climate

Presentation: pdf (7 MB).

An introduction to ENES and ESGF with some scientific motivation.

- Weather and Climate Computing Futures in the context of European Competitiveness

Meeting: ICT Competitiveness

Trieste, September, 2012

Includes the following talks:

Weather and Climate Computing Futures in the context of European Competitiveness

Presentation: pdf (4 MB).

In this talk I addressed some elements of how climate science interacts with policy and societal competitiveness in the contentxt of extreme climate events etc, but the main body was on the consequences for modelling and underlying infrastructure.

This table drives much of the conversation:

| Key numbers for Climate Earth System Modelling | 2012 | 2016 | 2020 |

|---|---|---|---|

| Horizontal resolution of each coupled model component (km) | 125 | 50 | 10 |

| Increase in horizontal parallelisation wrt 2012 (hyp: weak scaling in 2 directions) |

1 | 6.25 | 156.25 |

| Horizontal parallelization of each coupled model component (number of cores) |

1,00E+03 | 6,25E+03 | 1,56E+05 |

| Vertical resolution of each coupled model component (number of levels) |

30 | 50 | 100 |

| Vertical parallelization of each coupled model component | 1 | 1 | 10 |

| Number of components in the coupled model | 2 | 2 | 5 |

| Number of members in the ensemble simulation | 10 | 20 | 50 |

| Number of models/groups in the ensemble experiments | 4 | 4 | 4 |

| Total number of cores (4x6x7x8x9) (Increase:) |

8,00E+04 (1) |

1,00E+06 (13) |

1,56E+09 (19531) |

| Data produced (for one component in Gbytes/month-of-simulation) |

2,5 | 26 | 1302 |

| Data produced in total (in Gbytes/month-of-simulation) |

200 | 4167 | 1302083 |

| Increase | 1 | 21 | 6510 |

The bottom line in the talk was that to deliver on all of this requires European scale infrastructure, that is, computing AND networks targeted at data analysis as well as data production!

- British experience with building standards based networks for climate and environmental research

- Rethinking metadata to realise the full potential of linked scientific data

- Provenance, metadata, and e-infrastructure to support climate science

Meeting: An Aussie Triumvirate

Canberra and Gold Coast, November, 2010

Includes the following talks:

These were three talks given in Australia during a short trip in November, 2010. I gave one in Canberra, and two on the Gold Coast.

British experience with building standards based networks for climate and environmental research

Presentation: pdf (5 MB)

This was the keynote talk for the Information Network Workshop, Canberra, November, 2010.

The talk covered organisational and technological drivers to network interworking, with some experience from the UK and European context, and some comments for the future.

All the other talks from the meeting are available on the osdm website.

Rethinking metadata to realise the full potential of linked scientific data

Presentation: pdf (3 MB)

Metadata Workshop, Gold Coast, November 2010

This talk begins with an introduction to our metafor taxonomy, and why metadata, and metadata tooling, are important. There is an extensive discussion of the importance of model driven architectures, and plans for extending our existing formalism to support both RDF and XML serialisations. We consider our the observations and measurements paradigm needs extension to support climate science, and discuss quality control annotation.

All the talks from this meeting are available on an [http://ands.org.au/events/metadataworkshop08-11-2010/index.html ANDS website].

Provenance, metadata and e-infrastructure to support climate science

Presentation: pdf (9 MB)

This was a keynote for the Australian e-Research Conference, 2010.

Abstract: The importance of data in shaping our day to day decisions is understood by the person on the street. Less obviously, metadata is important to our decision making: how up to date is my account balance? How does the cost of my broadband supply compare with the offer I just read in the newspaper? We just don’t think of those things as metadata (one persons data is another persons metadata). Similarly, the importance of data in shaping our future decisions about reacting to climate change is obvious. Less obvious, but just as important, is the provenance of the data:who produced it/them, how, using what technique, is the difficulty of the interpretation in anyway consistent with the skills of the interpreter? In this talk I’ll introduce some key parts of the metadata spectrum underlying our efforts to document climate data, for use now and into the future. In particular, we’ll discuss the information modelling and metadata pipeline being constructed to support the currently active global climate model inter-comparison project known as CMIP5. In doing so we’ll touch on the metadata activities of the European Metafor project, the software developments being sponsored by the US Earth System Grid and European IS-ENES projects, and how all these activities are being integrated into a global federated e-infrastructure.

All conference talks are available here.

- Cyberinfrastructure Challenges (from a climate science repository perspective)

Meeting: NSF Cyberinfrastructure for Data

Redmond, USA, September, 2010

Includes the following talks:

Cyberinfrastructure Challenges (from a climate science repository perspective)

Presentation: pdf (2MB).

I gave a very short presentation at this NSF sponsored workshop. See my blog entry for a commentary.

Update (Bryan, 31st January, 2017): The output of this workshop eventually appeared in an NSF Report.

- Software & Data Infrastructure for Earth System Modelling

Meeting: ENES Earth System Modelling Scoping Meeting

Montvillargennes, March, 2010

Includes the following talks:

Software & Data Infrastructure for Earth System Modelling

Presentation: pdf (1 MB).

This meeting was targeted as being the first step in a foresight process for establishing a European earth system modelling strategy. This is the talk on software and data infrastructure prepared for the meeting (authored with Eric Guilyardi and Sophie Valcke).

- Distributed Data, Distributed Governance, Distributed Vocabularies; The NERC DataGrid

Meeting: RDF and Ontology Workshop

Edinburgh, June, 2006

Includes the following talk:

Distributed Data, Distributed Governance, Distributed Vocabularies: The NERC DataGrid

Presentation: pdf ( 6 MB).

The workshop page is available here!

- NERC DataGrid Status

Meeting: NERC e-Science All-Hands-Meeting

AHM, April, 2006

Includes the following talk:

NERC DataGrid Status

Presentation: pdf (5 MB).

In this presentation, I present some of the motivation for the NERC DataGrid development (the key points being that we want semantic access to distributed data with no centralised user management), link it to the ISO TC211 standards work, and take the listener through a tour of some of the NDG products as they are now. There is a slightly more detailed look at the Climate Sciences Modelling Language, and I conclude with an overview of the NDG roadmap.